What Links Here?

Outbound Links

- ⭐ knatoms

- see

- ⭐ this-business-of-arrows

- arrows available in html

- Creative Commons License

- rule of least power

- Resource Description Framework "RDF"

- comparison of triplestores

- list of graph databases

- ⭐ spaced-repetition

- opportunity cost

- ⭐ spaced-repetition

- Metacademy

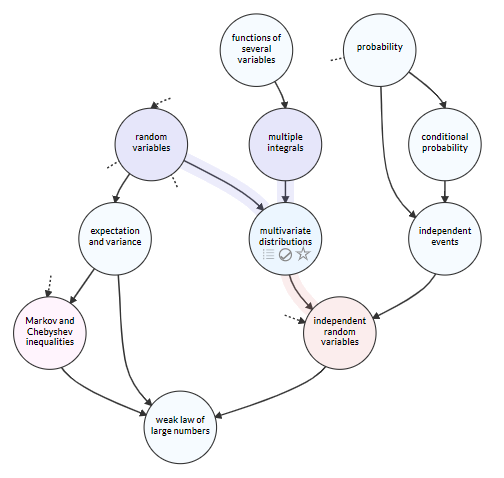

- Knowledge Graph example from Metacademy

- 🖼️ Knowledge Graph example from Metacademy

- Surfing Complexity

- ⭐ unbounded-recursion

- here

- "Rethinking Education"

- ⭐ paradox

- nextos

- Large Language Models explained briefly

- Learn Awesome: Computer Vision

- Metacademy

- On Metacademy and knowledge graphs

- Mathigon

- One Model

- Owleo

- Find Lectures

- Author info here

- Learn Anything

- Discussion of learn anything at hacker news

- Try "Learn Anything"

- WTF is a knowledge graph?

- Golden: The Intelligent Knowledge Base

- discussion here

- golden at golden

- The Skill Tree Principle: An Innovative Way to Grow your Skills Efficiently

- SkillUp

- LessWrong: Rethinking Education

- ASER: A Large-scale Eventuality Knowledge Graph

- Linked Data

- Microsoft Academic Knowledge Graph (MAKG)

- Wikipedia: Google Knowledge Graph

- Developer Roadmaps

- discussed here

- School Yourself: Algebra

- Contextualise

- discussed here

- Developer Roadmaps: Backend

- wikipedia: antimatroid

- skill-trees: Tasshin

- tess: counter argument against skill trees

- Piaget’s Theory of Cognitive Development

- wikipedia: experiential learning

- Youtube: Large Language Models explained briefly: from 3Blue1Brown

- ⭐ spaced-repetition

- ⭐ maths-education-games

- ⭐ interactive-text-ideas

- ⭐ this-business-of-arrows

Dependency Graph For Knowledge

Because everyone has a theory about the broken state of education

Can the knowledge or skills in a field be broken down into discrete atoms of knowledge?

If so, can we:

- Learn those atoms of knowledge (or atoms of skill)

- Assess our current knowledge/abilities with those atoms

- Assign dependencies between atoms

- Help us retain (and strengthen) our knowledge/skill over time.

- Define and measure "motivation" of learning/retaining (for any training system).

And, thus, if sufficient people use an atomic knowledge system, can we use statistics to:

- Find which teaching methods are more effective

- Find which assessment methods are more efficient

- Improve the dependency graph itself

- Measure the effectiveness of retention techniques

- Predict and optimize "motivation" over time.

And:

- Given a goal, suggest (and monitor) a path to achieve it

- When an atom of skill/knowledge is conquered, suggest one or more atoms to do next.

What is a knowledge dependency?

At the most gross level, I've seen this kind of dependency:

The subject CS101 is a pre-requisite for CS201. In order to sign up for CS201 you must have accreditation in CS101 or an equivalent subject.

But that's far from "atomic".

Atomic would be much smaller. And the dependencies are not necessarily "hard" dependencies.

I don't think it's always true to say "You can't learn Y until you've learned X". Our brains are very clever and can learn a lot even with incomplete information. The brain can fill in the gaps.

It might be better to say:

It is helpful to learn

Xbefore you learnY

or

Learning

XbeforeYwill give you a better chance of learningY

...where "better chance" can be defined in a way that is measurable. For example "better" might mean:

People who have learned X are:

- ...more likely to learn

Ymore quickly - ...more likely to complete assessments of

Ywithout quitting - ...more likely to achieve a mark of

P%on assessments ofY, within a timeframe ofT.

And "the system" could "automatically" learn these kind of things about people.

Whether they are true about a specific individual would remain to be seen. But a level of confidence could also be calculated "automatically".

The Principle of Atoms

Atoms are from an ancient Greek word meaning "un-cuttable".

They are the smallest imaginable unit. (Never mind that the physics of the real world has out-stripped the imagination of nineteenth century physicists. The things they called atomic did turn out to be cuttable, given a sufficiently large science budget, and enough angry scientists.)

The idea behind looking for "atoms" of knowledge is to divide and divide knowledge until it can't be divided any more. The very small pieces of knowledge that remain are useful because they can -- in theory -- be dealt with in a clean way. Atoms of knowledge can be taught quickly, assessed quickly, rearranged easily, and statistics about them can be gathered easily.

Contrast with what happens when knowledge is grouped into larger modules. You can't assess the module until the entire module is complete, so cycle time is longer. (Shorter cycle time leads to a system which can be improved dramatically faster, see)

If a module is not working (according to the stats) you can't tell which part of the module is the problem. You can only replace the module in its entirety (which is expensive) or tweak part of the module, and wait to see if they move the stats in the right direction.

Another common size for the units of learning is the "lesson", based on a class room or lecture hall lesson, and taking 30 to 90 minutes. This is also big enough to slow down the assessment. Instead a lesson should be thought of as a "molecule" made of many atoms put together in a specific way. Discovering the right atoms, and putting them together into the correct molecules is a big challenge. There is a combinatorial explosion of possibilities.

My main criticism of other learning systems is that, when they do take dependency graphs into account, they settle for very large grains. This is understandable in the short term, but fails to build a long-term resource that can be used for improving the overall learning system. (You can't make testable hypotheses about large scale pieces of knowledge)

A side-note on arrows

Skill Y depends on skill X. Do we write:

Y → X

or:

X → Y

This business of arrows is a tricky one.

What is it that "moves" in the direction of the arrow? Knowledge which moves from X to Y, or questions which Y "sends" to X.

I think it's more intuitive to say that:

X → Y

Skill X "leads to" Skill Y.

Or, if the system can have more than one type of arrow (or information about arrows) then labels can be attached to arrows:

X –(leads to)→ Y

If needed, there are many other arrows available in html:

Some favorites:

⇝ ↠ ↣ ↬ ↷ ⇁ ⇉ ⇏ ⇒ ⇛ ⇢ ⇴ ⇾

...which could each be put to special uses.

It has to be open and editable

For such a knowledge graph to be valuable it would need to be open source, probably licensed under a Creative Commons License, and be willing to accept pull requests from others (or permit them to fork the graph).

Support Materials

To be useful, an atom of knowledge would need to contain more than just a representation of the knowledge itself.

It may need:

- Examples

- Metaphors

- Illustrations

- "Why" — why is this knowledge valuable? What good is it? 1

- Sources

- History

There would also need to be many supporting tools to assist with the use of the knowledge graph. The tools should be separate from the graph itself. They operate on the graph, but the graph does not depend on the tools.

Ideally the knowledge graph would be encoded in a very simple system, and thus increasingly elaborate tools could build on top of this simple data. (This is based on a concept known as the rule of least power).

Resource Description Framework "RDF" is a somewhat widely used technology for similar systems. I am not sure if "very simple" would be an appropriate description. Looking at a comparison of triplestores and a list of graph databases seems like a necessary step when considering underlying technologies.

Retaining knowledge over time

A level of maintenance is required if you want to retain your skills.

Systems such as spaced repetition try to do this in an efficient manner.

There is a principle here:

knowledge (or skills) are far more valuable if they are retained.

It could be stated in a more extreme manner:

There is no point remembering something for a test and forgetting it afterward. Any system that encourages that behavior is worse than no system at all, as it wastes effort that could've been used for a beneficial purpose.

(This explanation has a dependency on the concept of opportunity cost)

If spaced repetition is used, and knowledge is atomic, you might think to ask: is an atom of knowledge the same as a single card (or fact) in a spaced repetition system. Sometimes yes... sometimes no.

Often, a single piece of knowledge should be explained by several cards. They may retell the same knowledge in different ways. It's really a technical decision related to how people store knowledge, and related to the most effective use of spaced repetition software.

For now just think of it like this: the model used should allow an atom of knowledge to be linked to by more than 1 spaced repetition fact.

Examples for 3 cards required for a new term:

- Word ↷ Definition.

- Definition ↷ Word

- "Cloze deletion" example. (may have several of these).

Meta example:

Consider some cards that explain the concept of "Cloze Deletion" (Let me use the nomenclature, "Front of card" ↷ "Back of card")

Card 1 (Definition)

- "Cloze Deletion" ↷ "A fill in the blank style of asking questions"

Card 2 (Definition)

- "A fill in the blank style of asking questions" ↷ "Cloze Deletion"

Card 3 (Example)

- "Questions with missing words are examples of ____ deletion" ↷ "Questions with missing words are examples of cloze deletion."

Even though we could have many cards on that one piece of knowledge, the knowledge fragment is what I would consider atomic. (But metrics could reveal if it is learned and retained faster if always agglomerated with other knowledge)

Each card is a photo of the same atom, taken from different angles, to build up a more complete picture.

Metacademy

The closest thing I've found to the knowledge graph part of this idea is "Metacademy" which looks really interesting.

It has a knowledge graph, it is open source and CC licensed. (Great, great and great).

I wouldn't quite say the pieces of knowledge are atomic. They are more like a "lesson."

There's a lot of fundamental knowledge assumed.

As a result the knowledge is many sets of disjoint networks rather than a single mighty network. This is just a literal network effect though (as more detail is added, the number of nodes would grow but so will the number of edges, as will the connectedness of subnets)

The bar for adding a piece of knowledge to the graph is quite high. I think I'd want that bar to be quite low. (Particularly for the first few thousand nodes)

I don't think there's any assessment built in, nor (consequently) any spaced-repetition.

Each item has:

- Summary

- Learning goals

- Prerequisites

- Resources

- See also

Resources can be referenced by multiple items and have:

- Title

- Authors

- Brief description

- Resource Url

- Resource Type

- Access (Free|Free with registration|Paid)

- Resource year

- Edition Years

- Extra notes

Motivation: Measure and Optimize

Fundamentally: How is motivation measured? How is it optimized?

Motivation — maintaining this should be inherent in the system and the metrics that drive it internally

No point having a perfect system if it’s too boring to use.

- Have to ask why they want to do it — why do the participants want to engage with it?

- Why is it engaging? What do each of the students consider fun on that day at that time?

- Do personality types exist (Ray Dalio thinks they do, I'm less convinced) but if they do exist, how should they influence a dynamical system's delivery of material?

Gamification

Principles that have become mainstays in gamification could be successfully employed in the system I'm imagining.

Knowledge.... or Skills?

I've used knowledge and skills fairly interchangeably throughout. What do I mean by this? And should it really be one or the other?

I think of them as somewhat interchangeable because:

I give a specific meaning to "knowledge" which separates it from "information" or "data" or "facts" and elevates it above them.

By knowledge I mean "applicable" or "useful" information. Information that has been sufficiently ingrained into your mesh of understanding and behavior that you will apply it at the appropriate time.

Here's an example.

Imagine an alien has arrived in human form on the earth. Her name is Trevor, though that's irrelevant. Let's imagine Trevor has previously learned the fact that sunlight is warm. She steps outside on a summer day and is surprised by the warmth of the sun. In such a case Trevor only had information or facts: she didn't have "knowledge". Knowledge is more like a skill: it is so internalized, so embedded within your world view that it is immediately recalled at the appropriate time. It may even become part of the "unknown knowns" — the things you have internalized to such an extent that you don't need to consciously consider them.

Information becomes knowledge (in my definition) when it can be applied, when it fits into a mesh of ideas, and when you are fluent at applying it.

There may be some neurological basis for this difference. Motor learning is initially performed by the cerebellum. Fully-ingrained habits make their way right down into the basal-ganglia, and allegedly do not involve any further activation of the cerebellum. I don't know how accurately I've stated that, or whether it's indeed true. The most engaging account I've read of this was in the book "The Power of Habit" by Charles Duhigg.

Consider the opposite

This article, Surfing Complexity, from Lorin Hochstein's ramblings about software, complex systems, and incidents, brings forward an interesting point:

Perhaps::

- To "know" "X", it is not at all necessary to know the "atoms of knowledge" on which "X" is based (and the atoms of knowledge on which those are based, ad. infinitum).

Indeed, perhaps to know and be able to apply as many "X", "Y", "Z" etc as are practically needed for a successful life:

- It is necessary that you do not (as you cannot and should not aim to) know and retain all of the atoms of knowledge which underpin "X", "Y", "Z" etc.

Criticism of the idea

I'll quote a comment from here in full; it is criticising Adam Zerner's "Rethinking Education" (posted to LessWrong), but that idea overlaps almost completely with what I've described above, so the criticism is equally valid here:

Given that I'm already involved in the creation of similar system (Docademy.com), I figure I've finally found a thread on here where I have something to contribute :).

On the Dependency Tree

First some thoughts on your concept of a dependency tree. I started out thinking this would be the way to go, but I quickly ran into the problem others have mentioned on this thread: There's no one dependency tree. When looking at concepts like basic math, it's easy to think so, but the concept falls apart when you try to come up with a dependency tree for World History, the dependencies depend on the individual teacher.

The elegant solution to this is to tag individual learning resources (such as a video or chapter) with dependencies. The system will never give you a learning resource that requires B1 to learn B2 if you don't know B1, but will serve up other learning resources that teach B2 WITHOUT needing an understanding of B1. This solves all the problem of your dependency tree solution, but has none of the down sides inherent in the rigidity of it.

On The Standardization of Education

I am also in the camp that the standardization of education is a net negative to society and the individual. My research shows that the most effective individuals are those who specialize in the things that they are good at, rather than trying to be well rounded.

However, that doesn't change the fact that there might be certain skills which would be generally good to have. In that case, once you've solved the problem of being able to measure each skill individually, it's trivial for companies and organizations to simply not accept people who don't have these general skills. This would work similar to how modern Applicant Tracking Systems work, but it would allow you to get much more fine grained with the skills, and would have the benefit of being in a standardized format, rather than trying to parse variously formatted resumes.

After that, the free market would take care of the rest. If a group of skills truly allowed people to be generally more effective, the companies that screened for these skills would outcompete the companies that did not, and eventually they would be generally required for someone who wanted to function in society.

On Standardized Tests

You correctly assume that it's important to be able to quickly identify if someone is good at a subject. However, the type of standardized tests you mention, those that can be easily parsed by a computer, are only appropriate for a small subset of skills. Consider the following examples:

- You correctly use expected value calculations when prompted to do so for a test, but completely fail to do so when making real life decisions.

- You know most of the established theory about how to write good fiction, but your actual stories are boring, uninspired, and not engaging.

- You know how to shoot a basketball, how to dribble, and how to pass perfectly. But when trying to combine these skills in a game, you can't execute.

Ultimately, what these examples show is that for many skills, knowing how to do them in a way that can be easily measured by a computer is different then being able to actually use them in the real world. Rather, a more effective way to quickly measure someone's merit in a particular skill would take a portfolio approach. Under each skill it would list:

- The learning resources they had used to learn that skill.

- The standardized tests they had completed in the skill.

- Real world projects they had completed using the skills.

- Testimonials from the people they had worked on those projects with, and the people they learned with, talking about theiir abilities in those skils

On Motivation

The final problem you mentioned is motivation. You mentioned two problems in motivation

- Kids don't know their prerequisites when trying to understand a subject.

This is fixed with the method above.

- Kids don't connect what they're learning with what they can do with it.

If you accept my premise above, that education shouldn't be standardized, I think you've actually got this backwards. If you look into the school models that are more self-directed, such as Waldorf, Montesorri, or Sudbury, you'll see that the students don't go looking for something to learn, then figure out how they can use it. Rather, they choose a goal, a project, or an experiment, then learn the skills they need in order to accomplish or create it.

Therefore this ideal school would have a list of goals, projects, and experiments to work on, which would be tied to skills. Students could choose the goal they wanted to accomplish (or create their own), and the system would suggest learning resources that would teach them the necessary skills.

I'd also like to add some of the other issues I see with motivation that you didn't mention.

- The lecture method of learning is low immersion and a poor way to keep students engaged.

To fix this, the main role of teachers would be helping students through completing their actual projects, and the teaching would happen more informally, as part of helping them complete the project. If it was a more in-depth subject for which the student needed a book or lecture, the student could request that, but it would never be FORCED on them.

- There's absolutely no incentive for good teachers that motivate students to use their skills; they get paid the same as the bad teachers, and can use the same skills for other jobs which are much higher paying.

If students are free to choose their own projects, one would assume that some of those projects will be profit generating. To solve this problem, you would allow teachers a small stake in every project for which a student used their skill, as a bonus on top of their regular salary. This would incentive them to motivate the students to use the skills they teach.

Anyways, I pretty much live and breath this stuff, and would love to discuss it more with anyone who's interested. If you're seriously interested in pursuing a venture that brings into reality the concepts I've discussed above, and see the implications for things like meritocratic voting, disrupting education, and disrupting hiring, feel free to PM on here and we'll talk.

Based on the comment above, here is a different approach: instead work to build an "Emergent Knowledge Graph".

To do this, find training materials and:

- tag the atoms that it mentions.

- including the order in which they are mentioned.

(Aside: the type of tags I mention here are what social scientists and "qualitative scientists" called "codes", and this type of tagging they call "coding"; but I call it tagging because coding means 'computer programming' to me)

In fact, tag every single mention. Will need deep addressing. e.g. in a video it would be the start and end time. In a book, the page and line number. In a webpage, the URL, subsection, and some selectors to get right down to it. e.g. the starting character. Something so that different mentions can be "ordered" - you can tell which comes before which.

If a mention is an explanation -- then tag that mention as an explanation. So the tags would have multiple attributes.

Given enough tagged mentions across enough learning material you can later determine (with limited confidence):

- "when explaining atom B, people usually mention but do not explain atom A."

- (a shorter explanation of that relationship is: "atom B relies on atom A.")

Also, you can say:

- "when explaining atom B and explaining atom A, people usually explain atom A first."

- (a shorter explanation of that relationship is: "atom B relies on atom A.")

May also tag a mention as being “example” or “historic fact” —

- An "example", i.e. "An example of A is provided."

- History lesson, "The history of A is provided here"

So there's a lot of tagging to be done on teaching materials.

It would hopefully be done on good teaching materials.

The highest chance of extracting accurate tags of dependencies would be performed on training material that is already known to be good material. Similarly, those might be the lowest value dependencies as they are already well known.

It's a bit of a paradox, a chicken and egg problem.

Emergence part II

As an alternative to that approach, or in concert with it:

Given a piece of educational content....

Tag the knowledge atoms that it:

- relies on

- attempts to teach

(As above.) That’s it. No more detail than that.

Then create assessments for students. They could be simple spaced repetition cards. Sometimes they might be worked examples. All you need to know about the assessment questions is that they rely on atoms A, B, and or C.

Now, you have to randomly assess the students before or after they "use" (i.e. study/engage with/learn from/tick off) any content.

From that you’ll build a probabilistic (or "fuzzy") picture of how effectively individual students learned or did not learn any atom.

(Observation about society here -- The thing we keep assessing is the students and the teachers: The thing we should be assessing is the learning material.)

What we could then learn is:

- What atoms did this material tend to reinforce (ie teach)

- What atoms did this material tend to rely on?

We learn what it relied on like this:

The students who knew X before-hand tended to do better at learning Y and Z than the students who did not know X before hand. Therefore the learning material has some functional (or "revealed") dependency on X.

On Zettelkasten

For personal knowledge, most graph-based ideas were pioneered by Niklas Luhmann in his Zettelkasten system. This system is described with lots of detail in https://takesmartnotes.com/ and made him extraordinarily productive.

Essentially, Luhmann had one small card per semantic unit. Cards had alphanumeric IDs. Cards backlinked to other cards using said IDs. He also used a card branching mechanism implemented in IDs as e.g. 123 -> 123a -> 123a1 which he called Folgezettel.

Lastly, he also had cards whose role was mostly to connect topics by serving as a link hub.

It's a really simple system that you can implement using plain text, Org, Markdown or some note taking application like Apple Notes or OneNote plus a few conventions.

After trying many things, for personal use I think nothing beats plain text (or a plain text format). I don't need a server, I can easily sync things, and it's really future proof.

I have also scaled this kind of setup to larger organizations, albeit using a more classical wiki-like approach (read longer articles instead of small semantic unit cards). For example, GitLab has excellent continuous integration. You can use an Emacs or Pandoc inside a Docker to export Org or Markdown files into an HTML.

from: nextos

Examples Convey The Meaning of Everything

I recently read two books on the collation of the Oxford English Dictionary. “The Meaning of Everything” and “The Dictionary of Lost Words”.

The dictionary was put together in a Scriptorium from millions of fragments, examples of usage of a word. Where it was used, the sentence it was used in. Examples were a more crucial input than definitions.

Words as Atoms of Knowledge

I was watching this 3blue1brown video on Large Language Models explained briefly and thinking of the way a token (e.g. a word) is vectorised, and then via attention, more specific context is added to it -- and I thought, well this is a bit like knowledge atoms.

Effectively, words themselves already are knowledge atoms. Or more broadly "terminology" is composed of knowledge atoms. As a starting set of knowledge atoms, you could use "vocabulary" -- and then, as the knowledge atoms that act as pre-requisites for any other knowledge atom, you could use "all the words in the definition of that term".

There are some problems with this approach, and there are some shortcomings.

Problems include:

- cyclical definitions.

- turtles all the way down

- multiple meanings for a term

Shortcomings include:

- not all knowledge is composed into "a word" (or "a term).

Others

- Learn Awesome: Computer Vision - learning map example from Learn Awesome

References

- Metacademy — "an interconnected web of concepts, each one annotated with a short description, a set of learning goals, a (very rough) time estimate, and pointers to learning resources. The concepts are arranged in a prerequisite graph, which is used to generate a learning plan for a concept."

- On Metacademy and knowledge graphs — "The graph itself reminds me of “skill trees” in MMORPGs"

- Mathigon — "The Textbook of the Future. Interactive. Personalised. Free."

- One Model — Record, manage and share any knowledge: fast, free and open. "A highly efficient knowledge organizer: a desktop tool for those who use lists, organized notes, emacs org-mode, mind maps, or outlines."

- Owleo — "learning graph"

- Find Lectures (offline now — Author info here)

- Learn Anything "Interactive mindmap for learning" — "Organize world's knowledge, explore connections and curate learning paths" — Discussion of learn anything at hacker news — Try "Learn Anything"

- WTF is a knowledge graph? — Jo Stichbury at hackernoon grapples with the topic of Knowledge Graphs, though these seem to be "related facts" not exactly knowledge as I'm defining it here.

- Golden: The Intelligent Knowledge Base — "Introducing Golden: Mapping human knowledge" — discussion here — golden at golden

- The Skill Tree Principle: An Innovative Way to Grow your Skills Efficiently — The SkillUp approach sees you pick three skills to advance each month, for the duration of that month, using deliberate practice techniques. Next month you might take those skills further or add new skills to your skill tree or work on a different skill from your skill tree.

- LessWrong: Rethinking Education — includes 87 comments; "I propose that we pool all of our resources and make a perfect educational web app. It would have the dependency trees, have explanations for each cell in each tree, and have a test of understanding for each cell in each tree. It would test the user to establish what it is that he does and doesn’t know, and would proceed with lessons accordingly.

In other words, usage of this web app would be mastery-based: you’d only proceed to a parent concept when you’ve mastered the child concepts." — one comment is quoted in full below - ASER: A Large-scale Eventuality Knowledge Graph — "...existing large-scale knowledge graphs mainly focus on knowledge about entities while ignoring knowledge about activities, states, or events, which are used to describe how entities or things act in the real world. To fill this gap, we develop ASER (activities, states, events, and their relations), a large-scale eventuality knowledge graph extracted from more than 11-billion-token unstructured textual data. ASER contains 15 relation types belonging to five categories, 194-million unique eventualities, and 64-million unique edges among them. Both intrinsic and extrinsic evaluations demonstrate the quality and effectiveness of ASER"

- Linked Data — "Linked Data is about using the Web to connect related data that wasn't previously linked, or using the Web to lower the barriers to linking data currently linked using other methods."

- Microsoft Academic Knowledge Graph (MAKG) — a large RDF data set with over 8 billion triples.

- Wikipedia: Google Knowledge Graph

- Developer Roadmaps (discussed here) — "Step by step guides and paths to learn different tools or technologies" current roadmaps cover "frontend", "backend", "devops"

- School Yourself: Algebra — "Free interactive lessons from award-winning Harvard instructors"

- Contextualise — (discussed here) "Contextualise is a simple and flexible tool particularly suited for organising information-heavy projects and activities consisting of unstructured and widely diverse data and information resources"

- Developer Roadmaps: Backend — "Step by step guide to becoming a modern backend developer in 2022" - I quite like the way this has been put together, including the icons for icons for "Alternative option" and "Order in roadmap not strict". See the other roadmaps they have, from here https://roadmap.sh/roadmaps

- wikipedia: antimatroid

- skill-trees: Tasshin

- Piaget’s Theory of Cognitive Development

- wikipedia: experiential learning

- Youtube: Large Language Models explained briefly: from 3Blue1Brown

See also

-

I've read that, in studies done in the US, Women were more likely to engage with computer science material when it was put in a context that highlighted its practical value, whereas Men were more willing to accept the material on a purely abstract basis. I'm not making claims about the causes of this difference. I read this in the book "Coders" by Clive Thompson. He spoke highly of the study's authors, and I take his word for it. He seems very clever. I was pretty worried going into his book, but was more and more reassured by his writing the further in I went.↩